The Blog

Comparing AMD GPU Moonlight+Sunshine Streaming Using H264 AVC vs H265 HEVC

To my eyes, in certain conditions, H.264 looks better than H.265 when running Sunshine and Moonlight from my desktop to laptop.

Desktop is running a R9 7900X with RX 6700 XT. Laptop is a R5 5650U.

HEVC should almost always outperform H264, right? Except to my eyes, sometimes, H264 looks better…

Found two causes:

a) h264 remains sharper (but with blocking artifacts), whereas h265 is overall higher quality but blurrier thanks to deblocking

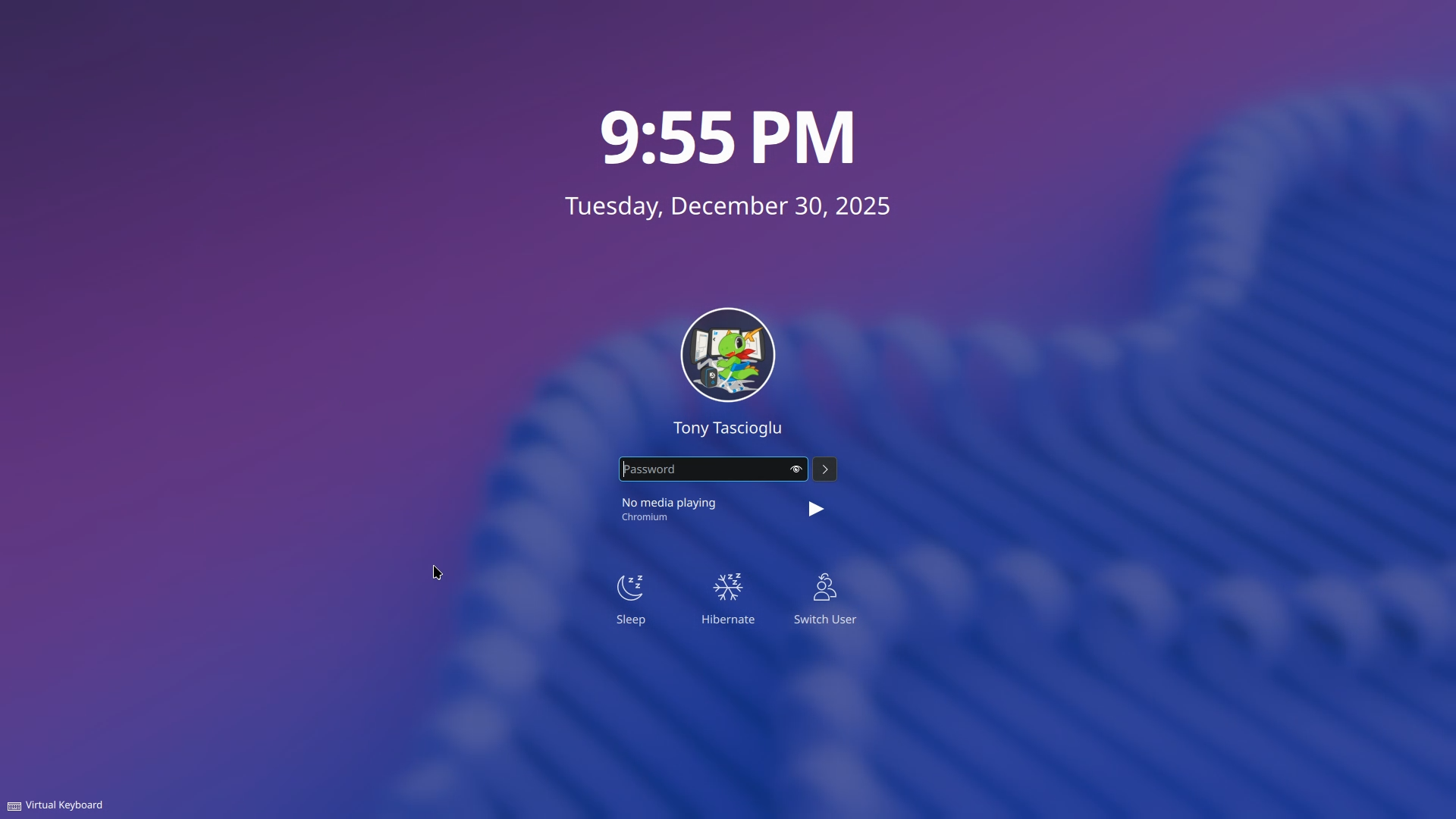

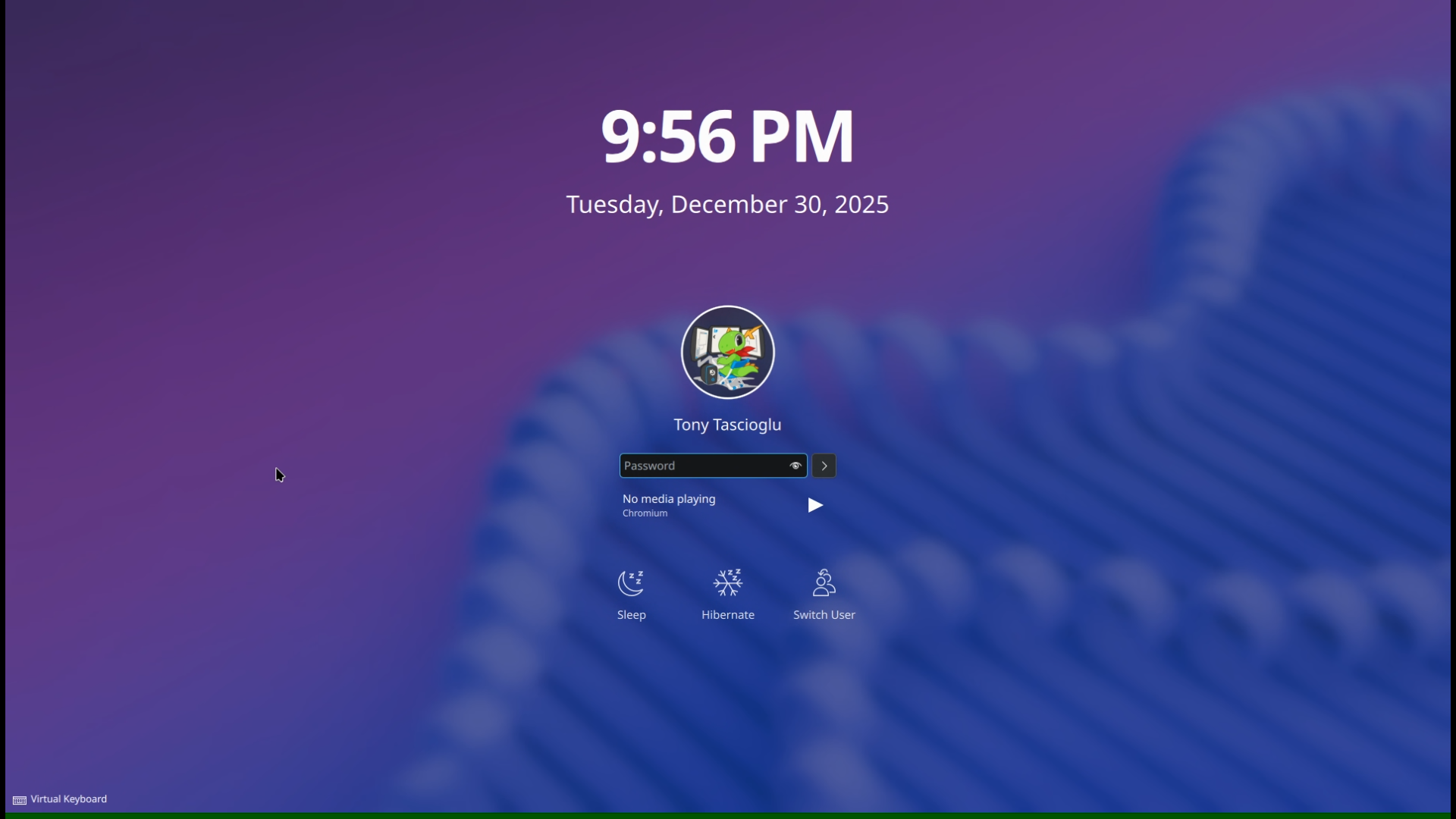

b) look at this same image, captured at 1920×1080, streamed from my computer in h264 and in hevc:

Look at the text! (I know this is a poor example, I should have put more 1px wide lines and text)

Notice how the 265 has the green bar at the bottom?

h264 is sending real 1920×1080, so it is displayed with no scaling. hevc for some reason has the green bar on the bottom. this messes up the aspect ratio, so the whole image is scaled, and all 1px lines and text end up blurry

that's contributing to why I keep thinking hevc is blurrier - because it is. you can't have crisp text on bilinear scaled content

so wtf is the green bar? is this like the AMD 1920×1082 issue on AV1? where the hw encoder only outputs blocks of certain sizes?
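A rough way to reason about the block-size theory: if the hardware encoder only works on blocks of a fixed size, any height that is not a multiple of that size gets padded up, and the decoder is supposed to crop the extra rows off again. If the crop is lost somewhere, the padding rows become visible (all-zero YUV renders as green). The sketch below just does the rounding arithmetic. The block sizes in it are assumptions for illustration, not confirmed values for the AMD HEVC encoder.

```python
def align_up(value: int, block: int) -> int:
    """Round value up to the next multiple of block."""
    return -(-value // block) * block


def padding_rows(height: int, block: int) -> int:
    """Extra rows the encoder would have to add to reach an aligned height."""
    return align_up(height, block) - height


# Hypothetical alignment requirements; the real ones depend on the encoder.
for block in (2, 16, 64):
    coded = align_up(1080, block)
    print(f"align to {block:2d}: coded height {coded}, padding {padding_rows(1080, block)} rows")
```

With a 16-row alignment, 1080 becomes 1088, so 8 rows of padding would have to be cropped by the client. Width is not affected in this model because 1920 is already a multiple of 16 and 64.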

Both are running 1080p60 at 4 Mbit/s. Low, but fine for my non-gaming use.

Tony's Proxmox Reinstall and Migration Guide

Use case

There are many guides for doing “migrations” or “reinstalls” of proxmox online, sometimes with backups, sometimes with live copies etc.

My needs

I have an existing Proxmox 7 server running with a single boot SSD using LVM for PVE. I have additional SSDs for hosting my LXC and VM images, and hard drives that are bind-mounted into the LXCs where bulk storage is needed (eg: Immich and Nextcloud data directories).

While I have backups of the containers (and the bulk storage is RAID Z1), I am a single SSD failure away from the system not booting, which I consider catastrophic when it is a 6 hour $1000 flight away (thank Air Canada for that one).

I would like to reinstall PVE on this system, using two SSDs with ZFS RAID 1 for PVE, as 1) ZFS fits in far more with my current setup, handling snapshots seamlessly, and 2) it gives me redundant boot and EFI partitions should one of the SSDs outright fail.

What is different

- I have NO quorum, I use a single instance of PVE.

- I can NOT do a live migration, as I have a single host, and will be reusing the hardware.

- I do NOT want to install all my LXCs and VMs from a backup - they live on a separate SSD I will not be wiping, and I would like to reuse those pools as is.

- I do NOT want to backup and restore PVE, I am changing SSD configurations (1 drive LVM to 2 drive ZFS)

- My old setup picked up many levels of jank during a PVE 7 to 8 upgrade, so I want to avoid re-using unnecessary old configs

Again, what I want, is to reinstall PVE onto a new SSD with a new filesystem, reuse my existing VM files and pools already on the drives. No backup/restore of LXCs. Just a simple, take everything, plunk it into new PVE.

The solution

Turns out the solution is actually relatively simple.

Since I tried a few things first and re-did this process twice, let me share some thoughts:

- Proxmox reads the configs for your VMs and LXCs from /etc/pve/nodes.

- I set up a full Proxmox Backup Server as an LXC on another PVE instance (different location, not clustered)

The easy way

- Make backups of everything you care about.

- IF YOU WILL BE RE-USING SSDS, MAKE SURE YOU DD A BACKUP OF THEM!

- This one saved me and allowed me to repeat this procedure twice.

- Grab the important config files you will need from the old host

- /etc/pve/hosts

- Ignore the keys and stuff, it'll cause pain and misery

- /etc/wireguard/wg0.conf

- If you rely on wireguard and want to be lazy and re-use old keys (not best security practice)

- /etc/fstab

- If you have non-standard mounts (I had an older btrfs HDD not in a zpool or lvm)

- /etc/exports

- If you have custom NFS exports to go from PVE over to VMs (LXCs just bind mount)

- /etc/network/interfaces

- If you have custom networking or VLAN setups

- ~/.ssh/authorized_keys

- Any special configs you have.

- Swap out the SSDs for the new ones, prepare proxmox installation media

- Install Proxmox fresh on your two new SSDs!

- You should be able to boot into your new system

- Bring your system roughly in line with your old setup. For me, this included

- Re-setup network interfaces and bridges the way they were

- Restore my fstab, wireguard, exports

- Wireguard needs apt install wireguard wireguard-tools

- systemctl enable --now wg-quick@wg0.service (start your tunnel now and at boot)

- Re-import your storage devices (eg: my VM/LXC SSDs, data HDDs)

- Make sure they are all detected under disks!

- For my data HDD zpool, this was two steps

- zpool import tonydata

- causes it to show up in node → disks

- add it in the proxmox UI

- datacenter → storage → add new → zfs

- For my LVM SSDs, this was 1 step as they were already listed under disks

- add it in proxmox UI

- datacenter → storage → add new → lvm thin

- For my old BTRFS HDD, this was 3 steps

- Restore my old fstab (verifying UUID stayed the same)

- mount -a

- datacenter → storage → add new → btrfs

- Remember to choose the types of storage this is (backup and data, not disk images in my case)

- Export my NFS pools (this one I forgot to do until my VM was mad)

- apt install nfs-kernel-server

- restore /etc/exports

- exportfs -av

- Note, if you miss the above, it's probably not that bad, you'll be warned if things fail to start, it's iterative.

- Restore /etc/pve/nodes/pve/lxc and /etc/pve/nodes/pve/qemu-server

- You should now see your left bar populate back up with all your past lxc and VMs

- Try starting them, maybe they work, maybe they error, if so look back to above.

That was it. Didn't end up using any of my backups from the backup server (surprisingly). Only used losetup on my dd to grab config files for networks and such retroactively to remember names and addresses.
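The last restore step (copying only the guest configs back, and nothing else from the old /etc/pve) can be sketched in Python. This is a minimal sketch, not something I ran against a real node: the function name, the backup directory layout and the default node name `pve` are assumptions for illustration. It writes each file directly to its destination instead of going through a temp file, and it never overwrites a config that already exists.

```python
import shutil
from pathlib import Path


def restore_guest_configs(backup_nodes_dir, live_nodes_dir, node="pve"):
    """Copy only LXC and VM .conf files from a saved copy of /etc/pve/nodes.

    Keys, certificates and everything else in the backup are ignored.
    Existing configs on the new install are left untouched.
    """
    restored = []
    for kind in ("lxc", "qemu-server"):
        src_dir = Path(backup_nodes_dir) / node / kind
        dst_dir = Path(live_nodes_dir) / node / kind
        if not src_dir.is_dir():
            continue  # nothing of this kind was saved
        dst_dir.mkdir(parents=True, exist_ok=True)
        for conf in sorted(src_dir.glob("*.conf")):
            target = dst_dir / conf.name
            if target.exists():
                continue  # never clobber a config on the new host
            # copyfile writes straight into the target, no temp file and rename
            shutil.copyfile(conf, target)
            restored.append(target)
    return restored
```

On the new host this would be called along the lines of `restore_guest_configs("/root/old-pve/nodes", "/etc/pve/nodes")`, where `/root/old-pve/nodes` is a hypothetical location for the saved copy.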

Traps

- Do NOT try to blindly restore your /etc/pve

- Doing so will leave your /etc/pve in a bad state with keys that are lingering

- You will NOT be able to log in and will need to systemctl stop, clear /etc/pve and such

- Connection error 401: permission denied - invalid PVE ticket

- See my above point

- Do not try to RSYNC into /etc/pve

- It will fail. It is a FUSE mount and will refuse the temp files rsync makes.

- Use scp, or rsync with --inplace so it writes directly to the destination file.

- Do NOT try to restore your PVE host from Proxmox Backup Server

- Restoring the backup will yield errors with file overwrites

- Allowing overwrites will break your configs in two and fail on /etc/pve

- NOTE: proxmox-backup-client doesn't even back up /etc/pve by default (it only does root), so it's no use if you forgot that anyway

- PROXMOX BACKUP SERVER IS A RED HERRING!!

- In fact, if you don't need to back up and restore VMs, and you've taken a DD of your old SSD, you don't need it.

- it is excellent software for offsite backing up items, just not my current use case

- If it's not obvious, if you are using drive letters, like /dev/sda, sdb, YOU NEED TO STOP.

- Please just use UUIDs, or heck even labels. After you reinstall, things WILL be shifting!!
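For the drive letter trap, a quick check of the old fstab before restoring it can catch entries that will move around after the reinstall. This is a small sketch in Python; the function name and the device name patterns it looks for are my own assumptions and are not exhaustive.

```python
import re

# Kernel-assigned names that can change between boots or after a reinstall.
UNSTABLE_DEVICE = re.compile(r"^/dev/(sd[a-z]+|vd[a-z]+|nvme\d+n\d+)(p?\d+)?$")


def unstable_fstab_entries(fstab_text):
    """Return the device fields in an fstab that use raw kernel device names."""
    flagged = []
    for line in fstab_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # skip blank lines and comments
        device = stripped.split()[0]
        if UNSTABLE_DEVICE.match(device):
            flagged.append(device)
    return flagged
```

Entries that start with `UUID=`, `LABEL=` or `/dev/disk/by-uuid/` pass through untouched; anything like `/dev/sdb1` gets reported so it can be rewritten before the first `mount -a`.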

In fact, let me do a whole segment on Proxmox Backup Server

Proxmox backup server?

There seems to be some confusion with how this works, as a backup server can be added in two ways.

- Datacenter → Storage

- This can be used for backing up the LXC and VMs on your system.

- Note: VM and LXC items backed up this way will keep some config data without /etc/pve, specifically ram and cpu amounts

- This can not be used to back up PVE itself

- proxmox-backup-client

- This can be used to back up PVE. By default, it backs up a partition of your choosing

- If you back up /, make sure you also include /etc/pve, which is a FUSE mount

- If you already DDed the drive, you can always losetup and mount the disk image instead of using pxar.

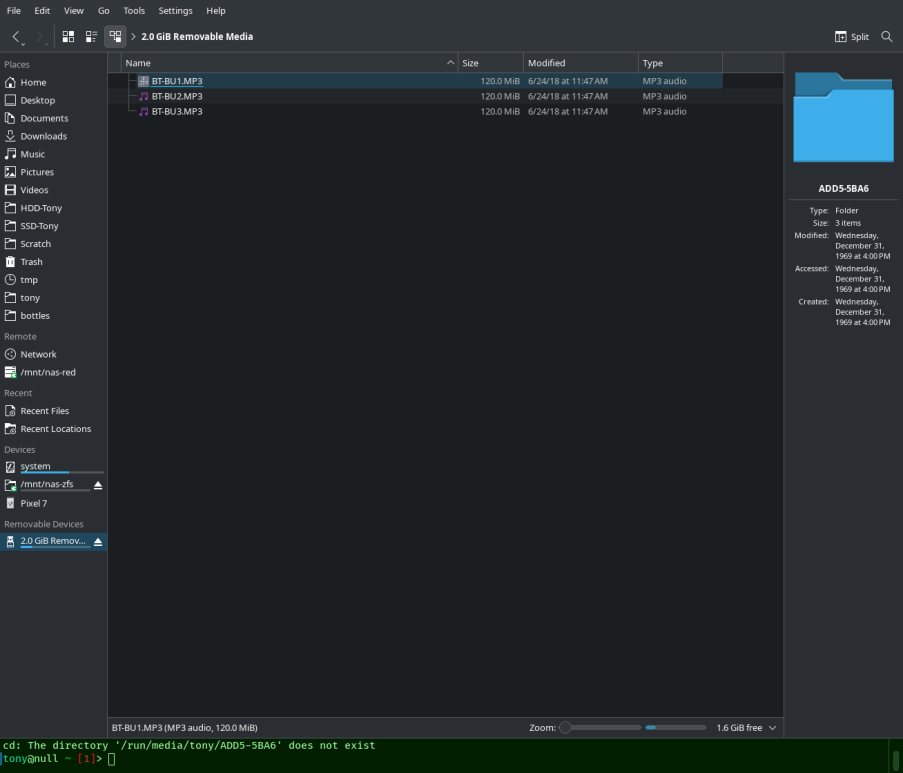

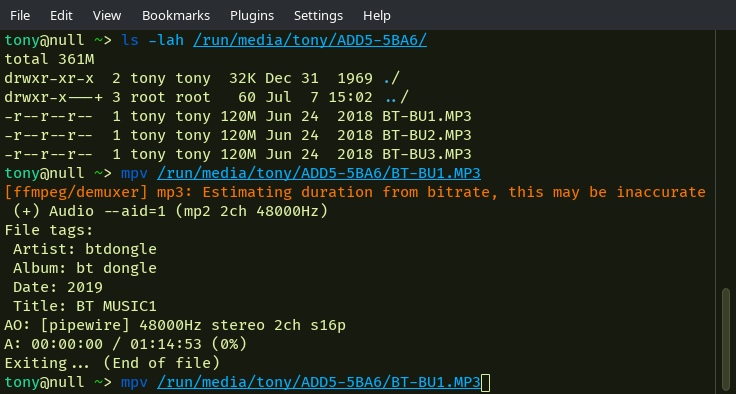

Weird USB Bluetooth Music receivers

My USB powered bluetooth audio receiver STARTED SHOWING UP AS A MASS STORAGE device.

I DIDN'T EVEN KNOW THAT THING HAD DATA LINES?!?

Did I get rubber-duckied by a BLUETOOTH RECEIVER?

WAIT YOU CAN MOUNT THE STORAGE DEVICE?!?

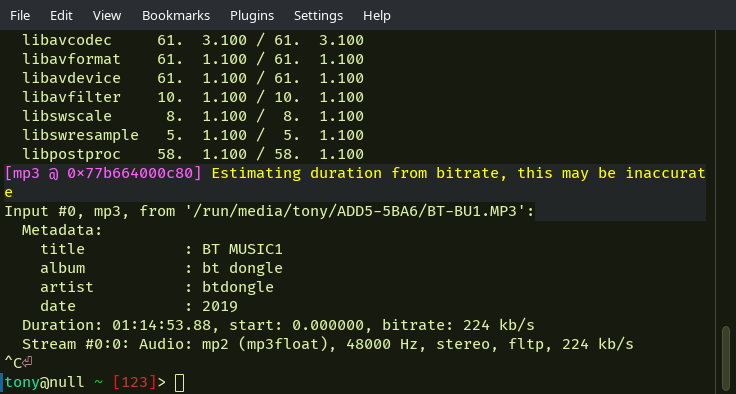

WTF IF YOU PLAY IT, THE FILE HAS LEGIT MP3 TAGS

OH SHIT I Think this is for it to work on crappy stereos that just play MP3 from a flash drive!!!

To adapt them to work with bluetooth

hm I don't hear anything and the mp3 file exists though

so I guess you need a dumber player that doesn't try to seek in a file

WAIT I THINK I KNOW WHAT THE OTHER FILES ARE

YOU CAN'T PLAY THEM, BUT IF YOU OPEN IT, IT TELLS THE PHONE TO GO TO THE NEXT SONG

so it's used to detect when you pressed previous/next!!! and from that, it signals to the bluetooth device to go back/forward

who the f*** makes this ASIC?!? There's no way this thing is economies of scale

What is wrong with the 2023 League worlds opening ceremony?

The original league worlds opening ceremony video from this year has very many technical quirks. Here is what I noticed and spent a few (prolly a dozen) hours fixing.

- The audio on the YouTube upload is inexplicably in mono and sounds notably worse than the Twitch livestream which was in stereo.

- Likely destructive interference when collapsing stereo to mono?

- The whole 'scripted' section of the opening is actually running at 29.97 fps, and interpolated to 60 but in the worst way.

- Half of their cameras are running at 29.97 which means every frame is duplicated for the stream which is 59.94 - this on its own is fine. Most of the side-angle cameras are 29.97.

- The other half of the cameras are also running at 29.97, but are interpolated to 59.94 THROUGH FRAME BLENDING?!?

- This means that every other frame is literally a 50/50 blend of the two frames before it. This is what causes that motion-blur like feel, and you can instantly tell which cameras they are on.

- It might be the cameras that also do AR are doing blending? unsure.

- In fact, the main stage camera and the cable cam blend on opposite frames - eg: one has good even frames, blended off, the other is vice versa. This might be because of an internal delay? I'm not sure. Don't get me wrong 1080p29.97 is fine, although odd since sports is usually 720p60 or 1080i60.

- The version on YouTube took a video that had TV black levels, mapped it to PC, then was converted to TV again, resulting in crushed black and white levels losing detail

- The version on Twitch has the audio and video about 100 ms out of sync, this is most noticeable with the fireworks at the end of gods not being in line with the music. Interestingly, the audio and video ARE synced correctly on the YouTube version.

- TV (and most video formats) uses 16-235 to express black-white instead of 0-255, whereas PC is 0-255. So, if you take a 16-235 feed, map it to 0-255, then crop it into 16-235 again, you effectively increase contrast and reduce your dynamic range. That is what seems to have happened on YouTube.

- Another odd quirk: some of the camera angles are different between the YT and Twitch versions? Either these were cut after the fact, or it was filmed weird? Not sure, I can upload a side by side comparison if anyone is curious. Notable on some audience and a few side angle shots.

- Also if you watch the side by side, depending on if you sync up the video or audio feeds, it almost looks like there are 2 video systems, and depending on which you sync up on, some transitions happen 100 ms before or after the other, with the other half being synced. I think the AR stuff is probably separate?

- About that frame blending, there are 4k60 audience camera recordings on YouTube, and if you watch those carefully, you'll notice the camera feed going to the video walls also does frame blending. This tells me that whatever system video was going into first was doing the blended frames, but again, only on half of the cameras.
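To put numbers on the black level point above, here is a small Python sketch of the suspected double conversion: expand 16-235 to 0-255, then clip the result back into 16-235. It is a simplified luma-only model and my guess at what happened, not a description of the actual YouTube or Riot pipeline. The function names are made up for the example.

```python
def limited_to_full(y):
    """Expand TV-range luma (16-235) to PC range (0-255)."""
    return (y - 16) * 255 / 219


def clip_to_limited(y):
    """Clamp a value into the TV range without rescaling it."""
    return min(235, max(16, y))


def double_converted(y):
    """TV range, stretched to PC range, then wrongly clipped as if it were TV range again."""
    return clip_to_limited(round(limited_to_full(y)))


levels_in = list(range(16, 236))
levels_out = {double_converted(y) for y in levels_in}
print(f"{len(levels_in)} input levels collapse to {len(levels_out)} output levels")
for y in (16, 29, 31, 126, 217, 220, 235):
    print(f"in {y:3d} -> out {double_converted(y):3d}")
```

In this model everything up to about 30 lands on 16 and everything from about 218 up lands on 235, which is the crushed shadows and highlights, while the midtones get stretched, which is the extra contrast.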

So, to fix this

- I took the stream from Twitch, split the audio and video. For the video, I couldn't simply drop all odd or even frames because their different cameras interpolate different sets of frames.

- From my cut, the “main” stage camera had odd frames correct, and even frames blended, and the cable cam that is further away had all even frames correct and odd frames blended.

- So I spent 3 hours clipping each camera angle, and dropping either the odd or even frames accordingly.

- I then re-synced the audio with that 100ms offset. This left me with a 29.97 fps video. That is my “cleaned up” version that I might upload, but, since the original was 60 fps, I wanted to interpolate it, but properly, not through frame blending.

- So I ran the whole thing through an AI video interpolation tool overnight on my GPU, and there you have it, 4k60 (technically 59.94). I'll add the 29.97 maybe as well.
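The per-camera frame dropping can be sketched in Python with frames reduced to single numbers. This is a toy model under my own assumptions (a blended frame is a 50/50 mix of two neighbouring real frames, and the "opposite parity" cameras are simply delayed by one frame); it is not the actual editing workflow, which was done by hand per clip. All names are hypothetical.

```python
def blend_to_double_rate(source, clean_parity):
    """Simulate a 29.97 fps feed doubled to 59.94 fps by frame blending.

    clean_parity is 0 if the real frames sit on even output indices, 1 if odd.
    """
    doubled = []
    for i, frame in enumerate(source):
        neighbour = source[min(i + 1, len(source) - 1)]
        doubled += [frame, (frame + neighbour) / 2]  # real frame, then a blend
    if clean_parity == 0:
        return doubled
    # A one-frame delay flips which parity carries the real frames.
    return [source[0]] + doubled[:-1]


def drop_blended(frames, segments):
    """Keep only real frames. segments is a list of (start, end, clean_parity), end exclusive."""
    kept = []
    for start, end, parity in segments:
        kept.extend(frames[i] for i in range(start, end) if i % 2 == parity)
    return kept


main_cam = blend_to_double_rate([0.0, 10.0, 20.0], clean_parity=1)   # odd frames are real
cable_cam = blend_to_double_rate([30.0, 40.0], clean_parity=0)       # even frames are real
stream = main_cam + cable_cam
print(drop_blended(stream, [(0, 6, 1), (6, 10, 0)]))
```

Dropping a fixed parity across the whole stream would keep the blends for one of the two cameras, which is why each cut needs its own entry in `segments`.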

Stuff I did not fix:

- The yellow jacket intro video is filmed in 24 fps, and brought up to 30, but not in a very consistent way, so it's staying as is.

- I can't change mic volumes, there isn't a lot, but you can pretty easily tell which parts are lip-synced anyway (listen for the reverb difference, or when vocals sound like they are stereo - eg: first 10s of vocals in gods sounds live, most of the rest is off the tape).

- I also can't fix their broken shadows on their AR characters.

Note: I do not own the music or video, those are from Riot Games. I'm simply uploading this in case anyone else wanted to watch it without the bizarre quirks of the version on YouTube.

If anyone from the Riot Games technical team is watching, I'm genuinely curious about how their production setup works and I would love to chat. (Or if you know someone who works with the technical team)

This is not meant to be a dunk on the performance, overall it was well put together, these are just 'quirks' I saw and couldn't unsee.

Here is the fixed version